The Camera Doesn’t Lie: The Stories of Deep Fakes

Image credit … This is…

January 19, 2023

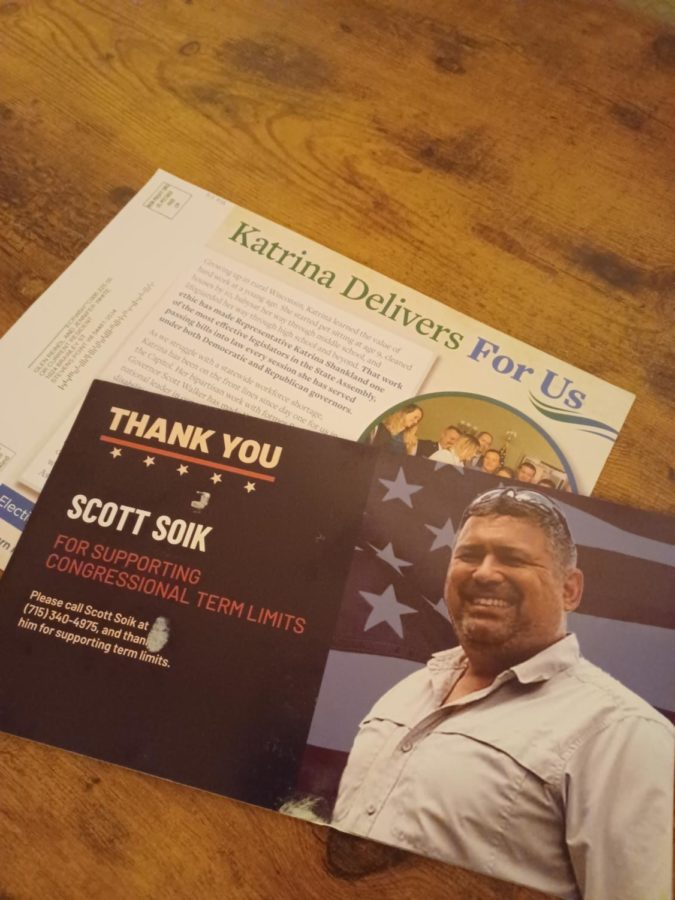

Imagine you’re in your room, about to get up for school, when your phone lights up with 100 new notifications. Confused, you open your DMs and messages to find that friends and family have texted and called you. A few of the messages read, “Sweety, why would you post something like this?” The more aggressive texts include slurs and threats from angry relatives. A couple of acquaintances are asking if it’s true because a friend told them. The Instagram DMs only worsen the blow: men are picking you apart and listing what you could get fixed to become an attractive 10. Many of these messages come with a link to a sketchy forum. Sweating and shaking, you click the link. It takes a few seconds to load, and then you see a 30-second video of you and a man you have never seen before going at it. You think to yourself: What the? Who’s that guy? Am I forgetting this? Was I drunk? I mean, that’s my face, but that doesn’t quite look like me.

This scenario, although not exact, is similar to what influencer and YouTuber Zamy Ding experienced, and she took the matter to YouTube. In a desperate attempt to prove that the porn video wasn’t hers, she posted a YouTube video of herself secretly entering a chat forum that hosted not only her video but also videos of other public figures, even politicians.

Deepfake technology has been around since 1997, but as we progress into the 21st century, AI technology has gotten so efficient that many have fallen victim to the lies of video and audio recordings. With that in mind, deepfake creation technology needs laws, along with dedicated legal and IT teams that find ways to detect edited videos and photos and prevent doctored evidence from reaching political and legal matters.

Do You Need to be a Computer Genius to Make a Deep Fake?

To start this discussion, let’s make sure we cover the basics: what is a deep fake? Ms. Kaster, a technology teacher at SPASH, explained, “Deepfake technology refers to the use of artificial intelligence (AI) and machine learning (ML) to create highly realistic manipulated videos and images. The technology is primarily used to superimpose one person’s face or voice onto another person’s body or audio in video and images.”

A PDF titled “Increasing Threat of Deep Fake Identities” describes the technology used in creating deep fakes: “In the 1990’s Adobe Photoshop became available to perform image editing software, today someone could use Deep Neural Network to perform a face swap. Some apps that are able to perform these effects are FaceShifter, FaceSwap, DeepFace Lab, Reface, Snapchat and TikTok, although TikTok and Snapchat have significantly lower advanced computerized requirements.” This goes to show that the technology to create a deepfake is available online if you search enough for it.

However, Ms. Kaster explains that apps only go so far in creating a sophisticated deepfake: “It depends on the complexity of the edits and the specific software or tools being used. Some apps and technologies, such as those I mentioned earlier, are designed to be relatively easy to use and can produce convincing results with minimal knowledge of video editing or computer skills. However, creating a video or picture that is completely believable to online users can be quite difficult and may require advanced knowledge and experience with video editing software and techniques.”

How Edited Audio Cost a Company $250 Thousand

With the discussion of deepfakes also comes the reality of scammers. Images and videos are not the only things that can be altered; audio can be too.

In 2019, The Wall Street Journal published an article describing a cybercrime committed against a UK energy company. As the story goes, the British company had been bought out by a German company (its parent company). One day, someone called an executive at the British company using the voice of the parent company’s CEO and said that they needed the British company to wire 250 thousand dollars into a Hungarian account for business purposes. The executive wired the money, and soon after, another call came through, supposedly from the German company, asking for another wire. The executive had IT look into it and found out the call was a deepfake. To this day, the scammer has not been caught.

Another fake audio recording is described in “The Increasing Threat of Deepfake Identities”: “In the lead-up to UK elections in 1983, members of the British anarcho-punk band Crass spliced together excerpts from speeches by Margaret Thatcher and Ronald Reagan to create a fake telephone conversation between the leaders, in which they each made bellicose, politically damaging statements.” Although this telephone conversation was a lot less sophisticated than the call made to the UK energy company, it still affected the direction and campaigns of UK politics.

Another example, although hypothetical, was suggested by Professor Danielle Citron to the House Permanent Select Committee on Intelligence in 2019, and it involves a legal case. “In this scenario a malignant actor produces a deep fake video of the Commissioner of the Baltimore Police Department (BPD) endorsing the mistreatment of Freddie Gray, an African American who died in police custody in 2015. … first performs research on the Baltimore Police Department to gather still images, video, and audio of the Commissioner to use as training data. This could come from past press conferences and/or news stories. Next, the malignant actor uses the gathered information to train an AI/ML model to mimic the likeness and voice of the Commissioner. With a trained model, the malignant actor creates a deep fake video of the police Commissioner endorsing the mistreatment of Mr. Gray, staged as a private discussion to lend credibility to the statements, which might also include inflammatory remarks. The malignant actor could then anonymously post the video to social media sites and draw attention to it using fake social media accounts.”

Unfortunately, the proposed scenario isn’t far from reality, and it wouldn’t take much to find a deep fake account and personally request that a video be made from police-recorded tapes. It does cost a few thousand to make such a video, but in a severe case where you would otherwise spend decades locked away, this would be your real-life Monopoly get-out-of-jail-free card.

What About Disney?

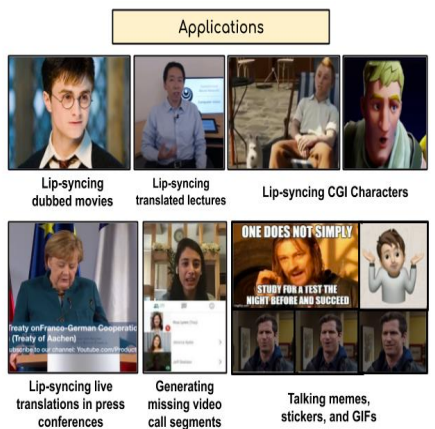

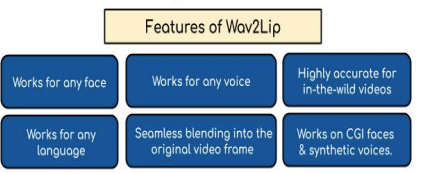

The first two sections make the dangers and consequences of deep fakes pretty clear, but what about the good that deep fakes can do? Some of the benefits deep fakes have to offer are especially apparent in the entertainment industry. An image from the PDF “Increasing Threat of Deep Fake Identities” shows the multitude of ways that corporations such as Disney use deep fake technology, including lip syncing in dubbed movies and CGI characters. In education and politics, deep fakes can lip sync translated lectures and press conferences, and help fill in gaps and pauses during a bad video or audio connection.

Even Ms. Kaster states, “Edited videos and pictures can be used for a variety of purposes. Some people use them for entertainment, such as creating funny memes or videos. Others use them for artistic expression, such as creating music videos or short films. Some people use them for personal use, like editing out blemishes in a personal photo, or creating videos of special events like weddings or birthday parties.” So yes, these benefits are an advancement in our day-to-day lives and make information more accessible than ever before. However, both Stephanie Soo, the host of the podcast “Rotten Mango,” and the PDF “Increasing Threat of Deep Fake Identities” report that 90-96% of deep fakes are non-consensual pornography. Therefore, it’s safe to say that deep fakes are being used for ill intentions more than for good.

Can We Even Stop This?

In the years to come, steps need to be taken to detect and stop the dangers that come with deep fakes. A bill passed in 2019, called the Deepfake Report Act of 2019, requires the Science and Technology Directorate in the Department of Homeland Security to report on the current state of forgery technology, including AI and machine learning techniques. Ms. Kaster also suggests that the government and tech companies should collaborate and develop counter software, also known as detection algorithms. As deep fakes become more efficient, so must the countermeasures for detecting false and harmful content.

Final Thoughts

In conclusion, deepfakes have both pros and cons. The cons include the possibility of edited photos and videos affecting legal investigations, spreading misinformation, impersonation, non-consensual pornography, and the list goes on! While deep fakes can also be used for education and entertainment purposes, the vast majority of deep fakes are harmful and diabolical. Awareness needs to be raised about this type of technology, and we need more bills and regulations passed to monitor the activity and stop situations like the one at the UK energy company, or even like what happened to Samy Ding. Deep fakes aren’t just something that happens to celebrities. There’s a very scary possibility that use of this technology will spike in the US, and you could see a classmate, your parental figure, or even yourself in sexually explicit content that you know you didn’t make. But what about your mom, dad, boyfriend, girlfriend, and everyone else? How are they able to tell? You’ve always heard “The camera doesn’t lie,” but the photographer does.